Nvidia's AI Dominance: What's Next for GPU Lifecycles?

The AI Gold Rush: Are GPUs Really About to Become Digital Dinosaurs?

Okay, let's dive into the question that's been buzzing around the AI community like a swarm of excited bees: how quickly does a GPU depreciate? It’s a question loaded with implications, not just for our wallets, but for the entire trajectory of AI development itself. We're talking about the engines that power this revolution, and the thought of them becoming obsolete is… well, let's just say it's enough to make any tech enthusiast pause. When I first heard this question being seriously debated, I felt a strange mix of excitement and trepidation, a feeling I hadn't had since the early days of quantum computing.

The heart of the matter, as I see it, isn’t whether GPUs will depreciate, but how quickly, and what comes next. Think about it: we're in the middle of an AI arms race, with companies and researchers pushing the boundaries of what's possible at an absolutely relentless pace. New architectures, new algorithms, and new approaches to machine learning are emerging almost daily. And the hardware? It’s struggling to keep up. Honestly, it’s like trying to build a road fast enough to keep pace with a rocket.

The Ever-Accelerating Cycle

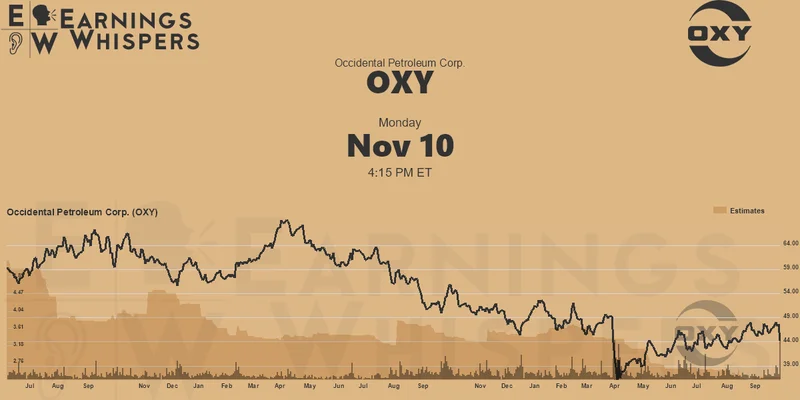

The old model of hardware depreciation, where a piece of tech slowly loses value over years, may be completely obsolete. We're entering an era of accelerated obsolescence, where the cutting edge becomes the blunt edge in a matter of months. Nvidia's stock swings show how much rides on that pace. As one article noted, AI stocks were at the "center of the action," making it clear that the entire market's direction can be influenced by the performance of a single chip company. And with Nvidia's profit report coming up, the stakes are incredibly high; a miss could send shockwaves through the entire industry. As the headline "Wall Street scrambles back from a big morning loss as Nvidia and bitcoin swing" shows, the market is highly sensitive to news about AI and related technologies.
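To make the contrast between the old model and accelerated obsolescence concrete, here is a minimal sketch comparing a straight-line schedule (value falls by the same amount each year) with a declining-balance schedule (a fixed fraction of the remaining value is lost each year). The purchase price, six-year life, and 50% annual rate are hypothetical placeholders chosen for illustration, not figures for any real Nvidia product or accounting policy.

```python
def straight_line(cost: float, salvage: float, years: int) -> list[float]:
    """Book value at the end of each year when value drops by an equal amount annually."""
    annual = (cost - salvage) / years
    return [cost - annual * (y + 1) for y in range(years)]


def declining_balance(cost: float, rate: float, years: int) -> list[float]:
    """Book value at the end of each year when a fixed fraction of the remaining value is lost."""
    values = []
    value = cost
    for _ in range(years):
        value *= 1 - rate  # lose `rate` of whatever value is left
        values.append(value)
    return values


# Hypothetical inputs for illustration only.
COST = 30_000.0
slow = straight_line(COST, salvage=0.0, years=6)    # "old model": even decline over six years
fast = declining_balance(COST, rate=0.5, years=6)   # accelerated: half the remaining value lost yearly

for year, (s, f) in enumerate(zip(slow, fast), start=1):
    print(f"Year {year}: straight-line ${s:,.0f} vs accelerated ${f:,.0f}")
```

Under these assumed inputs, the accelerated schedule has already lost half of the card's book value after one year, while the straight-line schedule has lost only a sixth. Which curve better describes real GPUs is exactly the open question this piece is circling.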

But here's where I see the real opportunity. This rapid depreciation isn't a sign of doom; it's a sign of progress, a sign of relentless innovation. It's like the Moore's Law of the AI era, but on steroids. Remember when critics warned that the printing press would destroy the world? Instead, it democratized knowledge and ushered in an era of unprecedented progress! Are we on the verge of a similar paradigm shift? Absolutely.

The key is to adapt, to anticipate, and to invest in the next wave of computing. What will that look like? Quantum computing? Neuromorphic chips? Entirely new architectures we haven't even dreamed of yet? The possibilities are endless, and that’s what gets me so fired up! I think the real question is, will we be ready to embrace these changes? Will we be able to retrain our workforce, retool our infrastructure, and reimagine our approach to AI development?

One thing I'm particularly excited about is the potential for more sustainable AI. The current generation of GPUs is power-hungry and resource-intensive. But what if we could develop AI systems that are not only more powerful but also more energy-efficient? Now that's a goal worth striving for, and it’s a goal that I believe is within our reach. What if new hardware architectures could learn with the same energy efficiency as a human brain?

It's not just about the technology, though. It's about the people. It's about the researchers, the engineers, the entrepreneurs, and the visionaries who are pushing the boundaries of what's possible. It's about creating a community that supports innovation, that fosters collaboration, and that embraces the challenges ahead.

And speaking of community, I've been seeing some incredibly insightful comments online. One person on Reddit put it perfectly: "The depreciation of GPUs is just the price we pay for progress. It's like saying that the cost of developing new vaccines is too high. We can't let short-term financial concerns hold us back from pursuing breakthroughs that could benefit all of humanity." I couldn't agree more.

Of course, with great power comes great responsibility. As we develop more powerful AI systems, we need to be mindful of the ethical implications. We need to ensure that these technologies are used for good, that they are accessible to everyone, and that they do not exacerbate existing inequalities. This is a conversation we need to be having, and it's a conversation that needs to involve everyone.

The Dawn of a New Intelligence

Here's the truth: the depreciation of GPUs isn't a problem; it's an opportunity. It's a chance to build a better future, a future where AI is more powerful, more sustainable, and more accessible than ever before. It’s a future I am incredibly excited to be a part of.

-

Warren Buffett's OXY Stock Play: The Latest Drama, Buffett's Angle, and Why You Shouldn't Believe the Hype

Solet'sgetthisstraight.Occide...

-

The Great Up-Leveling: What's Happening Now and How We Step Up

Haveyoueverfeltlikeyou'redri...

-

The Business of Plasma Donation: How the Process Works and Who the Key Players Are

Theterm"plasma"suffersfromas...

-

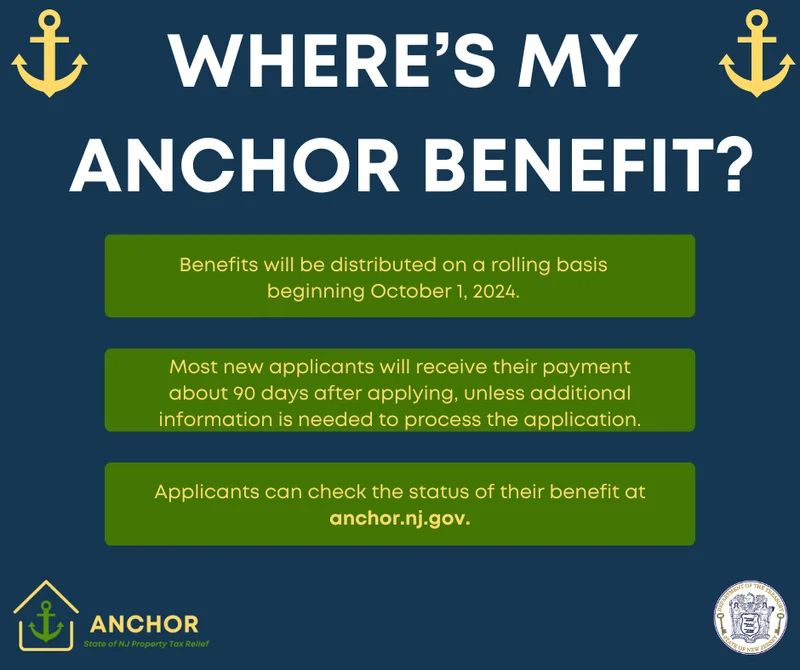

NJ's ANCHOR Program: A Blueprint for Tax Relief, Your 2024 Payment, and What Comes Next

NewJersey'sANCHORProgramIsn't...

-

The Future of Auto Parts: How to Find Any Part Instantly and What Comes Next

Walkintoany`autoparts`store—a...

- Search

- Recently Published

-

- Ethereum's Fusaka Upgrade: The 'Future' They're Selling vs. The Reality We'll Get

- TransUnion: Reimagining Credit's Future and Your Financial Journey

- Solana Acquires Vector.fun: Coinbase's "Everything Exchange" Strategy

- Scott Kirby at American Airlines: Decoding the Future of United Through His Past

- Nvidia Stock: Price Movements and Earnings Outlook

- Bitcoin: Price Today & Market Outlook

- Maxi Doge: Presale Buzz and Crypto News – What We Know

- Fear and Greed Index Plunges: Crypto Market Reactions and What We Know

- Pizza's Next Chapter: Innovation and Our Changing Tastes

- Firo Hard Fork: Likely Price Impact and What We Know

- Tag list

-

- Blockchain (11)

- Decentralization (5)

- Smart Contracts (4)

- Cryptocurrency (26)

- DeFi (5)

- Bitcoin (31)

- Trump (5)

- Ethereum (8)

- Pudgy Penguins (5)

- NFT (5)

- Solana (6)

- cryptocurrency (6)

- XRP (3)

- Airdrop (3)

- MicroStrategy (3)

- Stablecoin (3)

- Digital Assets (3)

- bitcoin (3)

- PENGU (3)

- Plasma (5)

- Zcash (7)

- Aster (5)

- investment advisor (4)

- crypto exchange binance (3)

- SX Network (3)